Why Power BI Needs a Data Warehouse: Limits, Risks, and How to Scale Analytics

It is widely accepted that Power BI can quickly provide an excellent analytics solution for organizations. Within a few days, a proof of concept (PoC) can be created and a final solution plan can be developed. This may then initiate a project to integrate the source data, which can also be completed rapidly.

However, there are concerns about whether this approach is feasible, secure, and of sufficient quality for all management needs.

This is where a data architect, data steward, or BI competence center must step in to ensure these requirements are met.

Reports and analyses should be accurate and based on valid, trusted data. The approach taken to achieve the highest level of security, quality, and consistency is crucial.

The fast implementation approach

Typically, there are one or more sources that you want to connect to with Power BI. Power BI has extensive tools for connecting and integrating source data (ETL). The advantage is that a single employee or expert team can use these integrated tools to prepare the data and build and populate the dimensional model.

Typically, there are one or more sources that you want to connect to with Power BI. Power BI has extensive tools for connecting and integrating source data (ETL). The advantage is that a single employee or expert team can use these integrated tools to prepare the data and build and populate the dimensional model.

When does it work?

- If you have simple data sources or just a single source.

- If you have a low volume of data.

- If you do not need much historical data (few slowly changing dimensions).

- If only limited data preparation with fields, tables, or joins is required.

- If you have a simple, easy-to-understand data model with one to three star schemas and a few non-cascading dimensions.

- If you have relatively few lookups.

- If you have decided against a Data Vault modeling approach.

This may work well initially. But as project requirements increase and time goes by, the complexity usually increases significantly.

When doesn’t it work?

Even if only a few of the above points do not apply, a completely different set of requirements for your data preparation will be needed. The need for a data warehouse, data lake, or data lakehouse increases.

With a simple formula, you can estimate the complexity of a data warehouse:

Complexity Factor = “Complexity of the source data” × “Requirements of management” × “Duration of the project”

The result is a complexity factor that translates into required time, resources, skill level, and the need for a consistent, modern analytics architecture. This illustrates the immense complexity that can arise. The complexity of the business model is often a good evaluation factor for the complexity to be expected in the analytics model.

Threats to your Power BI project

Power BI has limited modeling capabilities

Power BI has limited modeling capabilities

- Power BI has limited capability to implement historization concepts. It is therefore vital to implement snapshot and slowly changing dimension (SCD1, SCD2, Snapshot) historizations in a data warehouse, allowing you to query all historical data states efficiently in Power BI without reloading the data. This helps you get the most out of your analytics solution.

- The popular Data Vault 2.0 modeling technique cannot be directly implemented in Power BI without a data warehouse.

It is extremely important to implement historization concepts that record master data changes seamlessly, for example using timestamps and new records whenever a column value changes.

This can be handled automatically by an efficient data warehouse such as AnalyticsCreator.

As an example:

Salesperson A was responsible for Italy in 2022 and for France in 2023. If you run a report in 2023 about Salesperson A’s sales over the last two years by country, information about Italy would be lost in a non-historized model, and all sales would incorrectly be attributed to France.

The data warehouse historization concept monitors all relevant columns of all tables for changes and creates a new record for each change. Power BI then queries the correct history. The more master data you have, the more complex this becomes and the less feasible it is to handle directly in Power BI.

Advanced analytics models are therefore not possible in Power BI alone without a modern data warehouse. The performance of Power BI would be limited. Historization concepts for a data warehouse involve additional effort, which can be reduced significantly by using data warehouse automation software such as AnalyticsCreator.

Performance and storage space

If you are loading large amounts of data, it makes sense to do this in a data warehouse, where data can be stored cost-effectively and prepared for Power BI with high performance. It is also much easier to connect new data sources in a DWH and link them to existing structures than in Power BI itself.

If you are loading large amounts of data, it makes sense to do this in a data warehouse, where data can be stored cost-effectively and prepared for Power BI with high performance. It is also much easier to connect new data sources in a DWH and link them to existing structures than in Power BI itself.

Limited data preparation options

Power BI does not provide as many options as a dedicated ETL or data integration tool to prepare data. Advanced transformations, reusable logic, and complex workflows are typically easier and more maintainable in a data warehouse or ETL layer.

Separate data storage from the frontend tool

The purpose of a Data Warehouse, Data Lake, or Data Lakehouse is, among other things, to separate data storage and preparation from the frontend tools. In this architecture, Power BI is used to access and visualize data, loading only the data required for reporting into its in-memory storage.

A DWH usually contains much larger data volumes than any single Power BI model. This architecture is recommended by most experts. It also makes it easier to connect other tools—such as planning applications, consolidation software, or additional analytics tools—to the same trusted data platform.

If you perform complex data preparation directly in Power BI and later add other tools, you will have to repeat the same logic multiple times. Instead, the complexity of data preparation should be handled in the DWH, so that the self-service BI approach can be better implemented in the frontend. Department users can then access the DWH and build their own reports without heavy IT involvement. The rule is: do not prepare your core data in the Power BI layer.

A Data Analytics Platform (DWH, Data Lakehouse, Data Mart) can be created in Azure (SQL DB, tabular, etc.) using Azure Data Factory or on Microsoft SQL Server using SSIS ETL processes.

High risks

For management, it is a high risk not to implement a Data Analytics platform (Data Warehouse), as important key data can be lost and never reactivated. Data is a crucial asset for any business, and it is essential to capture, store, and analyze it to make informed decisions.

Without a data analytics platform, data can be lost or scattered across multiple systems, making it difficult to access and analyze. This can lead to missed opportunities, inaccurate insights, and uninformed decisions. Key data that could have been used to identify trends, forecast outcomes, or optimize business processes may be lost, directly impacting the company’s performance.

Furthermore, if data is not stored securely and is prone to errors or manipulation, it can lead to compliance and regulatory issues. For example, in regulated industries, not having a secure and auditable data platform can lead to legal implications and fines.

Therefore, it is crucial for businesses to adopt a data analytics platform to manage data effectively and mitigate the risks associated with data loss, inaccurate reporting, and regulatory issues.

The bottom line

Global studies by analysts, universities, and consulting firms report that at least 80% of the time in a business analytics project is spent on data preparation, and only about 20% on the frontend. It makes sense, therefore, to choose the right architecture and tools to get the most value from this effort.

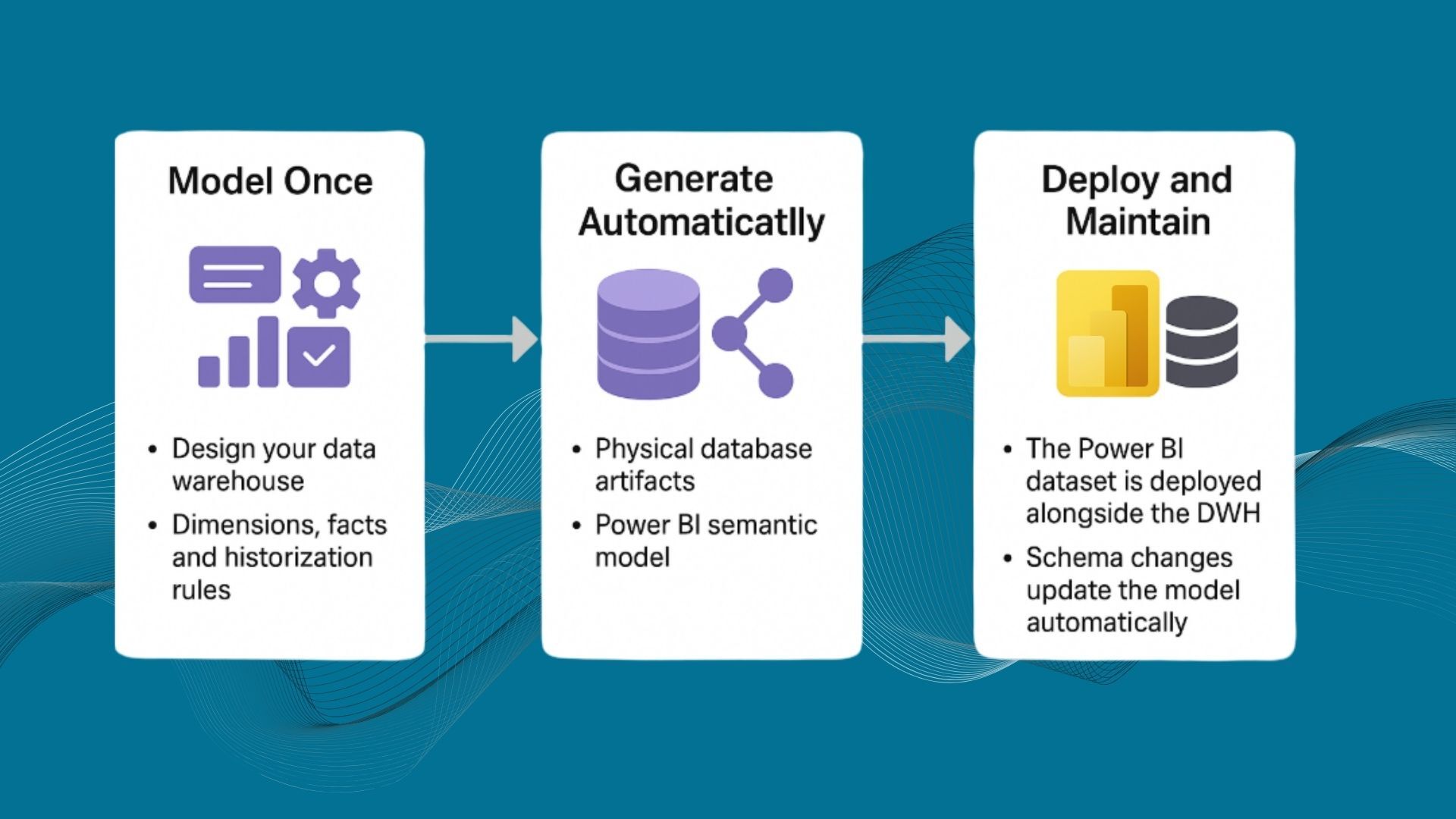

If you invest so much time in data preparation, it is logical to use a data warehouse automation tool like AnalyticsCreator to get best practices, the right architecture, and the necessary speed. You will be able to apply advanced analytical methods, deliver results faster, enhance your data knowledge, and reduce complexity and dependency on manual processes.

Frequently Asked Questions

Is Power BI alone enough for enterprise analytics?

Power BI is excellent for visualization and quick prototypes, but for complex, large-scale, or historical analytics, you generally need a data warehouse or data lakehouse behind it.

When can I safely use only Power BI without a data warehouse?

Using only Power BI can be sufficient if you have a small data volume, a single or very simple source, minimal historical requirements, and a simple dimensional model with few joins and lookups.

Why does Power BI struggle with historization and slowly changing dimensions?

Power BI is not designed as a full ETL or data warehouse engine. Implementing robust historization (SCD1, SCD2, snapshots) is difficult and quickly becomes unmanageable without a proper data warehouse layer.

Can I implement Data Vault 2.0 directly in Power BI?

No. Data Vault 2.0 requires a database and ETL/ELT framework. Power BI can consume Data Vault–derived marts, but it cannot act as the Data Vault storage or transformation layer itself.

Why should data preparation be done in a data warehouse instead of Power BI?

Centralizing data preparation in a DWH avoids duplicating logic across tools, improves governance, supports multiple frontends, and keeps Power BI focused on analytics and visualization instead of heavy data engineering.

How does a data warehouse improve Power BI performance?

A DWH pre-aggregates, cleans, and structures data, reducing model complexity and data volume in Power BI. This improves refresh times, query speed, and overall user experience.

What are the risks of not using a data analytics platform?

Key risks include data loss, scattered data, inconsistent KPIs, compliance issues, inaccurate insights, and an inability to audit or trust management reports.

How can AnalyticsCreator help in a Power BI environment?

AnalyticsCreator automates data warehouse modeling, historization, and ETL/ELT code generation. It builds a robust backend for Power BI, reducing manual work, improving data quality, and enabling advanced analytics at scale.